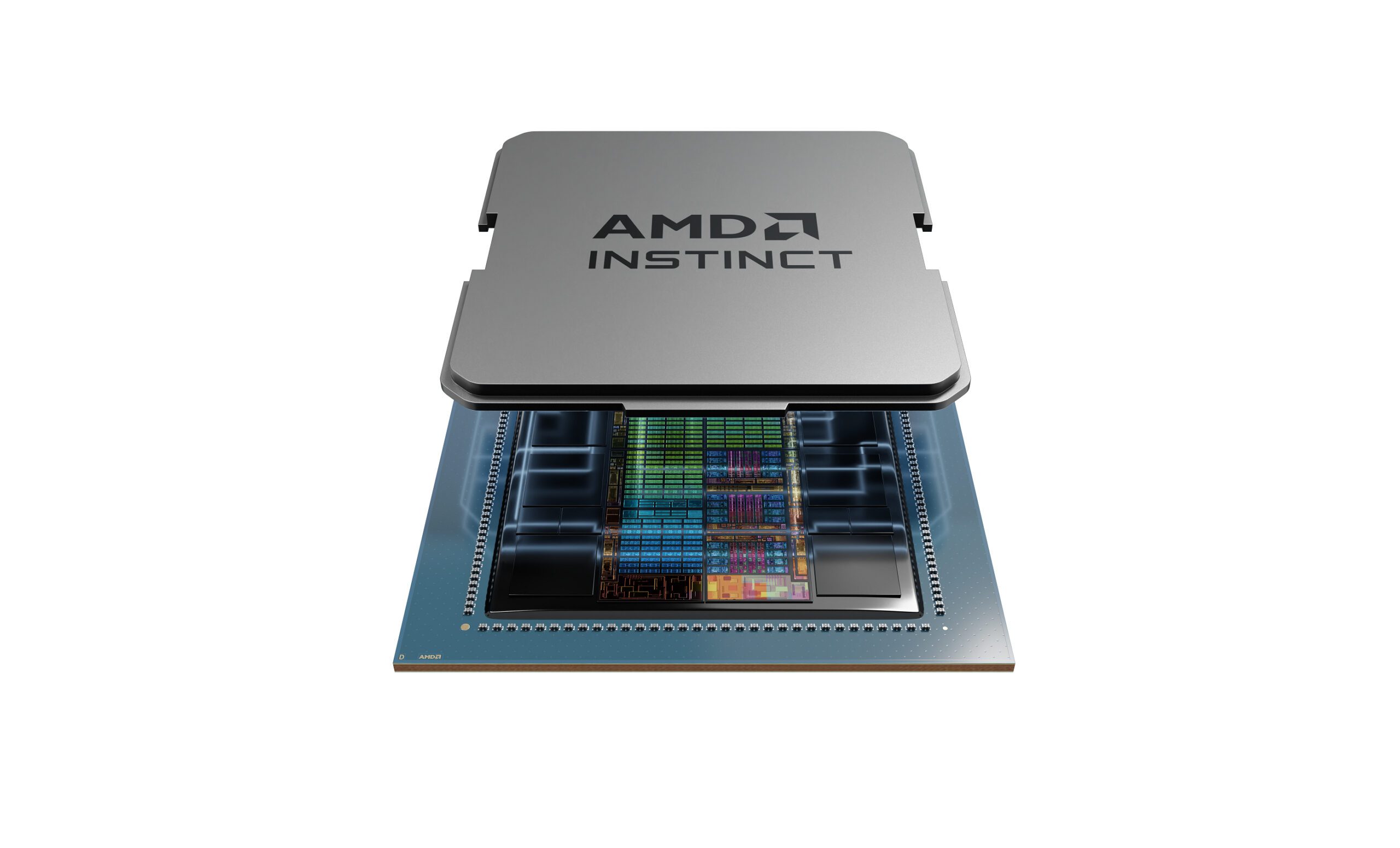

Customers like Fireworks AI are leveraging new OCI Compute instances to enhance their AI inference and training capabilities. The OCI Supercluster stands out among cloud providers, supporting up to 16,384 AMD Instinct MI300X GPUs within a single ultrafast network fabric.

SANTA CLARA, Calif. – Sept 28, 2024 – AMD (NASDAQ: AMD) has announced that Oracle Cloud Infrastructure (OCI) is incorporating AMD Instinct™ MI300X accelerators with ROCm™ open software into its latest OCI Compute Supercluster instance, the BM.GPU.MI300X. The instance is designed for AI models with hundreds of billions of parameters, and the OCI Supercluster supports up to 16,384 GPUs in a single cluster using the same ultrafast network fabric technology as other accelerators on OCI. These bare metal instances are built for demanding AI workloads, including large language model (LLM) inference and training, and offer high throughput along with high memory capacity and bandwidth. Companies such as Fireworks AI have already begun using them.

“AMD Instinct MI300X and ROCm open software are increasingly recognized as reliable solutions for critical OCI AI workloads,” said Andrew Dieckmann, Corporate Vice President and General Manager of AMD’s Data Center GPU Business. “As we continue to expand into rapidly growing AI markets, this combination will deliver high performance, efficiency, and enhanced system design flexibility for OCI customers.”

“The AMD Instinct MI300X accelerators enhance OCI’s diverse range of high-performance bare metal instances, eliminating the virtualization overhead typically associated with AI infrastructure,” stated Donald Lu, Senior Vice President of Software Development at Oracle Cloud Infrastructure. “We are thrilled to provide customers with more options to accelerate their AI workloads competitively.”

Delivering Trusted Performance and Flexibility for AI Training and Inference

Extensive testing by OCI has validated the AMD Instinct MI300X’s capabilities for AI inference and training, demonstrating that it can serve latency-sensitive use cases even at large batch sizes and can fit the largest LLMs within a single node. These results have caught the attention of AI model developers.

Fireworks AI, a fast-growing platform for building and deploying generative AI, is harnessing the performance benefits of OCI with the AMD Instinct MI300X. With over 100 models, Fireworks AI is effectively scaling its services to meet customer demands.

“Fireworks AI empowers enterprises to develop and deploy complex AI systems across diverse industries,” said Lin Qiao, CEO of Fireworks AI. “The memory capacity offered by the AMD Instinct MI300X and ROCm open software enables us to scale our services as models continue to evolve.”
